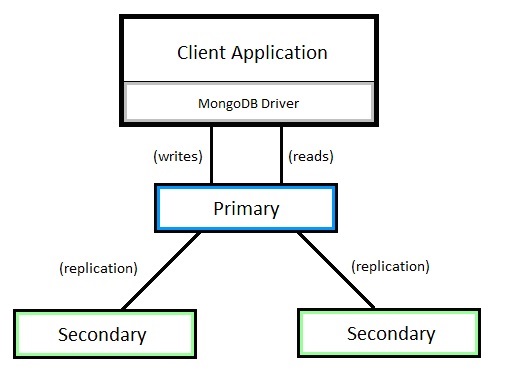

A replica set is a group of servers with one primary (the server that takes client requests) and multiple secondaries (servers that keep copies of the primary's data). If the primary crashes, the secondaries can elect a new primary from amongst themselves.

If you are using replication and a server goes down, you can still access your data from the other servers in the set. If the data on a server is damaged or inaccessible, you can make a copy of the data from one of the other members of the set.

Replication Configuration:

Set the environment variables for the MongoDB binaries in the root user's profile:

[root@mongodb4 ~]# cat .bash_profile
# .bash_profile

# Get the aliases and functions
if [ -f ~/.bashrc ]; then
    . ~/.bashrc
fi

# User specific environment and startup programs
PATH=$PATH:$HOME/bin
export PATH
export JAVA_HOME=/opt/jdk1.8.0_162
export JRE_HOME=/opt/jdk1.8.0_162/jre
export PATH=$PATH:/opt/jdk1.8.0_162/bin:/opt/jdk1.8.0_162/jre/bin
export MONGODB_HOME=/yis/mdashok/mongodb_home/mongodb-linux-x86_64-enterprise-rhel62-4.0.0
export PATH=$PATH:$MONGODB_HOME/bin
[root@mongodb4 ~]#
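
Re-running the setup can append the same export lines to .bash_profile more than once. Below is a small sketch that adds the two MongoDB lines only when they are missing; the function name add_mongodb_env is hypothetical, and it assumes the install path shown in the profile above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the MongoDB exports to a profile file only if
# each exact line is not already there, so repeated runs stay idempotent.
add_mongodb_env() {
  local profile="${1:-$HOME/.bash_profile}"
  # Install path taken from the .bash_profile shown above
  local home_line='export MONGODB_HOME=/yis/mdashok/mongodb_home/mongodb-linux-x86_64-enterprise-rhel62-4.0.0'
  # Single quotes keep $PATH and $MONGODB_HOME literal in the file
  local path_line='export PATH=$PATH:$MONGODB_HOME/bin'
  touch "$profile"
  # -q quiet, -x whole-line match, -F fixed string (no regex)
  grep -qxF "$home_line" "$profile" || printf '%s\n' "$home_line" >> "$profile"
  grep -qxF "$path_line" "$profile" || printf '%s\n' "$path_line" >> "$profile"
}
```

After running add_mongodb_env as root, source ~/.bash_profile and check that mongod --version reports the 4.0.0 binary.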

$mkdir -p /usr/server1/mongodb
$mkdir -p /usr/server2/mongodb
$mkdir -p /usr/server3/mongodb
$chmod -R 777 /usr/server1
$chmod -R 777 /usr/server2
$chmod -R 777 /usr/server3

Create the data and log directories in the server1, server2 and server3 folders:

$cd /usr/server1/mongodb
$mkdir data log
$cd /usr/server2/mongodb
$mkdir data log
$cd /usr/server3/mongodb
$mkdir data log
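
The per-server steps above can be collapsed into one loop. mkdir -p also creates missing parent directories, so each member's mongodb, data and log folders come from a single call. This sketch (hypothetical function make_member_dirs) leaves out the chmod 777 step; the base directory defaults to /usr as in this walkthrough and is a parameter only so it can be tried elsewhere.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create data and log directories for the three
# local replica set members under BASE (default /usr, which needs root).
make_member_dirs() {
  local base="${1:-/usr}"
  local n
  for n in 1 2 3; do
    # -p creates server$n/mongodb as well, and is a no-op if it exists
    mkdir -p "$base/server$n/mongodb/data" "$base/server$n/mongodb/log"
  done
}
```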

Starting the Mongod Service in Server 1 (Primary Server)

[root@mongodb4 /]# more /usr/server1/mongodb/mongodb.conf
systemLog:
  destination: file
  path: "/usr/server1/mongodb/log/mongodb.log"
  logAppend: true
processManagement:
  fork: true
net:
  bindIp: 127.0.0.1
  port: 6666
storage:
  dbPath: "/usr/server1/mongodb/data"
replication:
  replSetName: "OurReplica"
[root@mongodb4 /]#

[root@nosqlDB mongodb]# mongod -f mongodb.conf
2018-09-14T11:35:21.149+0530 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'
about to fork child process, waiting until server is ready for connections.
forked process: 2607
child process started successfully, parent exiting
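
The Server 1 file differs from Server 2's only in the directory and port, and Server 3 is later reached on port 8888, so its file (not shown) presumably follows the same pattern. A hypothetical helper that writes such a file for member N on a given port is sketched below; it reproduces the settings shown above with nested keys indented, since YAML nesting depends on indentation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write mongodb.conf for member N listening on PORT,
# using the same settings as the Server 1 file (log file, fork, localhost
# bind, data directory, replica set "OurReplica"). BASE defaults to /usr.
write_member_conf() {
  local n="$1" port="$2" base="${3:-/usr}"
  local dir="$base/server$n/mongodb"
  mkdir -p "$dir"
  cat > "$dir/mongodb.conf" <<EOF
systemLog:
  destination: file
  path: "$dir/log/mongodb.log"
  logAppend: true
processManagement:
  fork: true
net:
  bindIp: 127.0.0.1
  port: $port
storage:
  dbPath: "$dir/data"
replication:
  replSetName: "OurReplica"
EOF
}
```

For example, write_member_conf 3 8888 would produce the Server 3 file under /usr/server3/mongodb.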

Starting the Mongod Service in Server 2 (Secondary Server)

[root@nosqlDB mongodb]# pwd
/usr/server2/mongodb
[root@nosqlDB mongodb]#
[root@nosqlDB mongodb]# cat mongodb.conf
systemLog:
  destination: file
  path: "/usr/server2/mongodb/log/mongodb.log"
  logAppend: true
processManagement:
  fork: true
net:
  bindIp: 127.0.0.1
  port: 7777
storage:
  dbPath: "/usr/server2/mongodb/data"
replication:
  replSetName: "OurReplica"
[root@nosqlDB mongodb]#
[root@nosqlDB mongodb]# date
Fri Sep 14 11:39:28 IST 2018
[root@nosqlDB mongodb]# pwd
/usr/server2/mongodb
[root@nosqlDB mongodb]# ls -ltr
total 16
drwxr-xr-x. 2 mdashok mongodb 4096 Aug 28 11:59 data
drwxr-xr-x. 2 mdashok mongodb 4096 Aug 28 11:59 log
-rwxrwxrwx. 1 mdashok mongodb  303 Aug 28 14:36 mongodb.conf.old
-rwxrwxrwx. 1 mdashok mongodb  264 Sep 14 11:34 mongodb.conf
[root@nosqlDB mongodb]# mongod -f mongodb.conf
2018-09-14T11:39:33.403+0530 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'
about to fork child process, waiting until server is ready for connections.
forked process: 2686
child process started successfully, parent exiting
[root@nosqlDB mongodb]#

Starting the Mongod Service in Server 3 (Arbiter)

[root@nosqlDB mongodb]# date
Fri Sep 14 11:42:11 IST 2018
[root@nosqlDB mongodb]# pwd
/usr/server3/mongodb
[root@nosqlDB mongodb]# ls -tlr
total 16
drwxr-xr-x. 2 mdashok mongodb 4096 Aug 28 12:00 data
drwxr-xr-x. 2 mdashok mongodb 4096 Aug 28 12:00 log
-rwxrwxrwx. 1 mdashok mongodb  303 Aug 28 14:36 mongodb.conf.bkp
-rwxrwxrwx. 1 mdashok mongodb  265 Sep 14 11:34 mongodb.conf
[root@nosqlDB mongodb]# mongod -f mongodb.conf
2018-09-14T11:42:17.453+0530 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'
about to fork child process, waiting until server is ready for connections.
forked process: 2738
child process started successfully, parent exiting
[root@nosqlDB mongodb]#
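
With all three config files in place, the three mongod -f commands can be run from one loop. Because each file sets fork: true, each mongod call returns once its child process is ready, so the members start one after another. This is a sketch; start_members and the MONGOD override are hypothetical names, and setting MONGOD=echo prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start all three members from their config files,
# stopping at the first one that fails. MONGOD names the binary to call.
MONGOD="${MONGOD:-mongod}"

start_members() {
  local base="${1:-/usr}" n
  for n in 1 2 3; do
    "$MONGOD" -f "$base/server$n/mongodb/mongodb.conf" || return 1
  done
}
```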

Connecting to Server 1

[root@nosqlDB ~]# date
Fri Sep 14 11:44:57 IST 2018
[root@nosqlDB ~]# cd /usr/server1/mongodb/
[root@nosqlDB mongodb]# pwd
/usr/server1/mongodb
[root@nosqlDB mongodb]# mongo --port 6666
MongoDB shell version v4.0.0
connecting to: mongodb://127.0.0.1:6666/
MongoDB server version: 4.0.0
Server has startup warnings:
2018-09-14T11:35:21.161+0530 I STORAGE [initandlisten]
2018-09-14T11:35:21.161+0530 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine
2018-09-14T11:35:21.161+0530 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem
2018-09-14T11:35:21.965+0530 I CONTROL [initandlisten]
2018-09-14T11:35:21.965+0530 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
2018-09-14T11:35:21.965+0530 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
2018-09-14T11:35:21.965+0530 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
2018-09-14T11:35:21.965+0530 I CONTROL [initandlisten]
2018-09-14T11:35:21.966+0530 I CONTROL [initandlisten]
2018-09-14T11:35:21.966+0530 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
2018-09-14T11:35:21.966+0530 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2018-09-14T11:35:21.966+0530 I CONTROL [initandlisten]
2018-09-14T11:35:21.966+0530 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
2018-09-14T11:35:21.966+0530 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2018-09-14T11:35:21.966+0530 I CONTROL [initandlisten]
MongoDB Enterprise >

Connecting to Server 2

[root@nosqlDB mongodb]# date
Fri Sep 14 11:47:14 IST 2018
[root@nosqlDB mongodb]# pwd
/usr/server2/mongodb
[root@nosqlDB mongodb]# mongo --port 7777
MongoDB shell version v4.0.0
connecting to: mongodb://127.0.0.1:7777/
MongoDB server version: 4.0.0
Server has startup warnings:
2018-09-14T11:39:33.439+0530 I STORAGE [initandlisten]
2018-09-14T11:39:33.439+0530 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine
2018-09-14T11:39:33.439+0530 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem
2018-09-14T11:39:34.302+0530 I CONTROL [initandlisten]
2018-09-14T11:39:34.302+0530 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
2018-09-14T11:39:34.302+0530 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
2018-09-14T11:39:34.302+0530 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
2018-09-14T11:39:34.302+0530 I CONTROL [initandlisten]
2018-09-14T11:39:34.303+0530 I CONTROL [initandlisten]
2018-09-14T11:39:34.303+0530 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
2018-09-14T11:39:34.303+0530 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2018-09-14T11:39:34.303+0530 I CONTROL [initandlisten]
2018-09-14T11:39:34.303+0530 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
2018-09-14T11:39:34.303+0530 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2018-09-14T11:39:34.303+0530 I CONTROL [initandlisten]
MongoDB Enterprise >

Connecting to Server 3

[root@nosqlDB mongodb]# date
Fri Sep 14 11:49:05 IST 2018
[root@nosqlDB mongodb]# pwd
/usr/server3/mongodb
[root@nosqlDB mongodb]# mongo --port 8888
MongoDB shell version v4.0.0
connecting to: mongodb://127.0.0.1:8888/
MongoDB server version: 4.0.0
Server has startup warnings:
2018-09-14T11:42:17.473+0530 I STORAGE [initandlisten]
2018-09-14T11:42:17.473+0530 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine
2018-09-14T11:42:17.473+0530 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten]
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten]
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten]
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten]
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2018-09-14T11:42:18.284+0530 I CONTROL [initandlisten]
MongoDB Enterprise >
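
All three members log the same transparent huge pages warnings. A sketch that applies the suggested value 'never' to both files is below. It needs root for the real /sys path, the change is lost at reboot, and since these warnings appear among the startup warnings, the mongod processes likely need a restart before they clear. The function name disable_thp is hypothetical; the directory parameter exists only so it can be tried on a scratch copy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set transparent huge pages "enabled" and "defrag"
# to "never", as the startup warnings suggest. Not persistent across reboots.
disable_thp() {
  local dir="${1:-/sys/kernel/mm/transparent_hugepage}" f
  for f in enabled defrag; do
    echo never > "$dir/$f" || return 1
  done
}
```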

Initiate Server 1 as Primary Server

MongoDB Enterprise > db.version();
4.0.0
MongoDB Enterprise >

MongoDB Enterprise > rs.initiate()

{

"info2" : "no configuration specified. Using a default configuration for the set",

"me" : "127.0.0.1:6666",

"ok" : 1,

"operationTime" : Timestamp(1536909735, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536909735, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise OurReplica:OTHER>

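The shell is connected to 127.0.0.1:6666 (the port given with --port), i.e. the primary server.

Here we can see the replica set name: OurReplica.

OTHER: when the prompt is drawn, the member has not yet finished electing itself primary. A few seconds later the prompt changes to PRIMARY, as the next command shows.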
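
rs.initiate() with no argument builds a one-member configuration, which is why the other members are added one at a time later. On fresh members that have not been initiated yet, the whole set can instead be described in a single configuration document, including the arbiter (arbiterOnly: true). A sketch using mongo --eval follows; initiate_set and the MONGO override are hypothetical, and on the set already initiated in this walkthrough the call would be rejected.

```shell
#!/usr/bin/env bash
# Hypothetical helper: initiate OurReplica with all three members at once.
# Assumes fresh, not-yet-initiated mongod processes on 6666, 7777, 8888.
# Set MONGO=echo to print the command line instead of running it.
MONGO="${MONGO:-mongo}"

initiate_set() {
  local cfg='{_id: "OurReplica", members: [
    {_id: 0, host: "127.0.0.1:6666"},
    {_id: 1, host: "127.0.0.1:7777"},
    {_id: 2, host: "127.0.0.1:8888", arbiterOnly: true}
  ]}'
  # printjson shows the server's reply, as the interactive shell does
  "$MONGO" --quiet --port 6666 --eval "printjson(rs.initiate($cfg))"
}
```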

MongoDB Enterprise OurReplica:OTHER> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

MongoDB Enterprise OurReplica:PRIMARY>

Check the Current Database from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> db

test

MongoDB Enterprise OurReplica:PRIMARY>

Verify the Replication Status from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.status();

{

"set" : "OurReplica",

"date" : ISODate("2018-09-14T07:24:58.030Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1536909896, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1536909896, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1536909896, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1536909896, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1536909856, 1),

"members" : [

{

"_id" : 0,

"name" : "127.0.0.1:6666",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 4777,

"optime" : {

"ts" : Timestamp(1536909896, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-09-14T07:24:56Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1536909735, 2),

"electionDate" : ISODate("2018-09-14T07:22:15Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

}

],

"ok" : 1,

"operationTime" : Timestamp(1536909896, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536909896, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise OurReplica:PRIMARY>
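
rs.status() returns a large document; when only the role of each member matters, a one-line summary per member can be printed with --eval. A sketch with a hypothetical member_states function (MONGO=echo prints the command instead of running it):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "host  state" for every member of the set,
# taken from the name and stateStr fields of rs.status().members.
MONGO="${MONGO:-mongo}"

member_states() {
  local port="${1:-6666}"
  "$MONGO" --quiet --port "$port" --eval '
    rs.status().members.forEach(function (m) {
      print(m.name + "  " + m.stateStr);
    });'
}
```

For the one-member set above, this would print a single line similar to 127.0.0.1:6666  PRIMARY.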

Verify the Replication Configuration from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.conf();

{

"_id" : "OurReplica",

"version" : 1,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "127.0.0.1:6666",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5b9b61a67b1454a13fc7610a")

}

}

MongoDB Enterprise OurReplica:PRIMARY>

"host" : "127.0.0.1:6666" -> we are using localhost for this replication setup. In a real deployment each member would run on a different server (host name), and the port numbers may or may not be the same.

"arbiterOnly" : false -> this member is not an arbiter.

"members" -> so far the set has only one member (6666).

Add First Secondary Server (7777) from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.add("127.0.0.1:7777");

{

"ok" : 1,

"operationTime" : Timestamp(1536910836, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1536910836, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise OurReplica:PRIMARY>

Add Second Secondary Server (Server 3, port 8888) from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.add("127.0.0.1:8888");

{

"ok" : 1,

"operationTime" : Timestamp(1536911008, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536911008, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise OurReplica:PRIMARY>
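
The same rs.add() and rs.addArb() calls can be issued from the command line against the primary on port 6666, which is convenient in scripts. A sketch with a hypothetical add_member function; passing arbiter as the second argument switches to rs.addArb(), and MONGO=echo prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add HOST to the set through the primary on 6666.
# Second argument "arbiter" uses rs.addArb(); anything else uses rs.add().
MONGO="${MONGO:-mongo}"

add_member() {
  local host="$1" kind="${2:-secondary}" helper="rs.add"
  if [ "$kind" = "arbiter" ]; then
    helper="rs.addArb"
  fi
  "$MONGO" --quiet --port 6666 --eval "printjson(${helper}(\"${host}\"))"
}
```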

Verify the Replication Configuration from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.conf()

{

"_id" : "OurReplica",

"version" : 3,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "127.0.0.1:6666",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "127.0.0.1:7777",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "127.0.0.1:8888",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5b9b61a67b1454a13fc7610a")

}

}

MongoDB Enterprise OurReplica:PRIMARY>

Verify the Replication Status from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.status();

{

"set" : "OurReplica",

"date" : ISODate("2018-09-14T08:00:47.253Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1536912046, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1536912046, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1536912046, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1536912046, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1536912016, 1),

"members" : [

{

"_id" : 0,

"name" : "127.0.0.1:6666",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 6926,

"optime" : {

"ts" : Timestamp(1536912046, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-09-14T08:00:46Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1536909735, 2),

"electionDate" : ISODate("2018-09-14T07:22:15Z"),

"configVersion" : 3,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "127.0.0.1:7777",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 1210,

"optime" : {

"ts" : Timestamp(1536912046, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1536912046, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-09-14T08:00:46Z"),

"optimeDurableDate" : ISODate("2018-09-14T08:00:46Z"),

"lastHeartbeat" : ISODate("2018-09-14T08:00:46.923Z"),

"lastHeartbeatRecv" : ISODate("2018-09-14T08:00:45.986Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "127.0.0.1:8888",

"syncSourceHost" : "127.0.0.1:8888",

"syncSourceId" : 2,

"infoMessage" : "",

"configVersion" : 3

},

{

"_id" : 2,

"name" : "127.0.0.1:8888",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 1038,

"optime" : {

"ts" : Timestamp(1536912046, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1536912046, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-09-14T08:00:46Z"),

"optimeDurableDate" : ISODate("2018-09-14T08:00:46Z"),

"lastHeartbeat" : ISODate("2018-09-14T08:00:46.923Z"),

"lastHeartbeatRecv" : ISODate("2018-09-14T08:00:45.308Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "127.0.0.1:6666",

"syncSourceHost" : "127.0.0.1:6666",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 3

}

],

"ok" : 1,

"operationTime" : Timestamp(1536912046, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536912046, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise OurReplica:PRIMARY>
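
Besides each member's state, rs.status() reports each member's optime. The 4.0 shell helper rs.printSlaveReplicationInfo() summarizes how far each secondary is behind the primary (later shells rename it rs.printSecondaryReplicationInfo()). A sketch with a hypothetical replication_lag wrapper; MONGO=echo prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print per-secondary replication lag using the
# MongoDB 4.0 shell helper, run against the primary on port 6666 by default.
MONGO="${MONGO:-mongo}"

replication_lag() {
  local port="${1:-6666}"
  "$MONGO" --quiet --port "$port" --eval 'rs.printSlaveReplicationInfo()'
}
```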


Remove a Secondary Server (8888) from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.remove("127.0.0.1:8888");

{

"ok" : 1,

"operationTime" : Timestamp(1536912221, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536912221, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise OurReplica:PRIMARY>

Verify the Replication Configuration from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.conf();

{

"_id" : "OurReplica",

"version" : 4,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "127.0.0.1:6666",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "127.0.0.1:7777",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5b9b61a67b1454a13fc7610a")

}

}

MongoDB Enterprise OurReplica:PRIMARY>

Verify the Replication Status from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.status();

{

"set" : "OurReplica",

"date" : ISODate("2018-09-14T08:04:56.627Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1536912296, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1536912296, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1536912296, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1536912296, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1536912256, 1),

"members" : [

{

"_id" : 0,

"name" : "127.0.0.1:6666",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 7175,

"optime" : {

"ts" : Timestamp(1536912296, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-09-14T08:04:56Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1536909735, 2),

"electionDate" : ISODate("2018-09-14T07:22:15Z"),

"configVersion" : 4,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "127.0.0.1:7777",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 1460,

"optime" : {

"ts" : Timestamp(1536912286, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1536912286, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-09-14T08:04:46Z"),

"optimeDurableDate" : ISODate("2018-09-14T08:04:46Z"),

"lastHeartbeat" : ISODate("2018-09-14T08:04:55.421Z"),

"lastHeartbeatRecv" : ISODate("2018-09-14T08:04:54.922Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "127.0.0.1:6666",

"syncSourceHost" : "127.0.0.1:6666",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 4

}

],

"ok" : 1,

"operationTime" : Timestamp(1536912296, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536912296, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise OurReplica:PRIMARY>

Add Arbiter Server (8888) from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.addArb("127.0.0.1:8888");

{

"ok" : 1,

"operationTime" : Timestamp(1536912367, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536912367, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise OurReplica:PRIMARY>

Verify the Replication Configuration from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.conf();

{

"_id" : "OurReplica",

"version" : 5,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "127.0.0.1:6666",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "127.0.0.1:7777",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "127.0.0.1:8888",

"arbiterOnly" : true,

"buildIndexes" : true,

"hidden" : false,

"priority" : 0,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5b9b61a67b1454a13fc7610a")

}

}

MongoDB Enterprise OurReplica:PRIMARY>

Verify the Replication Status from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.status();

{

"set" : "OurReplica",

"date" : ISODate("2018-09-14T08:08:21.923Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1536912496, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1536912496, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1536912496, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1536912496, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1536912496, 1),

"members" : [

{

"_id" : 0,

"name" : "127.0.0.1:6666",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 7380,

"optime" : {

"ts" : Timestamp(1536912496, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-09-14T08:08:16Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1536909735, 2),

"electionDate" : ISODate("2018-09-14T07:22:15Z"),

"configVersion" : 5,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "127.0.0.1:7777",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 1665,

"optime" : {

"ts" : Timestamp(1536912496, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1536912496, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-09-14T08:08:16Z"),

"optimeDurableDate" : ISODate("2018-09-14T08:08:16Z"),

"lastHeartbeat" : ISODate("2018-09-14T08:08:21.763Z"),

"lastHeartbeatRecv" : ISODate("2018-09-14T08:08:21.763Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "127.0.0.1:6666",

"syncSourceHost" : "127.0.0.1:6666",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 5

},

{

"_id" : 2,

"name" : "127.0.0.1:8888",

"health" : 0,

"state" : 8,

"stateStr" : "(not reachable/healthy)",

"uptime" : 0,

"lastHeartbeat" : ISODate("2018-09-14T08:08:21.115Z"),

"lastHeartbeatRecv" : ISODate("2018-09-14T08:06:07.727Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "Error connecting to 127.0.0.1:8888 :: caused by :: Connection refused",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : -1

}

],

"ok" : 1,

"operationTime" : Timestamp(1536912496, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536912496, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise OurReplica:PRIMARY>

Verify That the Primary Server Is the Master Server

MongoDB Enterprise OurReplica:PRIMARY> db.isMaster();

{

"hosts" : [

"127.0.0.1:6666",

"127.0.0.1:7777"

],

"arbiters" : [

"127.0.0.1:8888"

],

"setName" : "OurReplica",

"setVersion" : 7,

"ismaster" : true,

"secondary" : false,

"primary" : "127.0.0.1:6666",

"me" : "127.0.0.1:6666",

"electionId" : ObjectId("7fffffff0000000000000001"),

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(1536912816, 1),

"t" : NumberLong(1)

},

"lastWriteDate" : ISODate("2018-09-14T08:13:36Z"),

"majorityOpTime" : {

"ts" : Timestamp(1536912816, 1),

"t" : NumberLong(1)

},

"majorityWriteDate" : ISODate("2018-09-14T08:13:36Z")

},

"maxBsonObjectSize" : 16777216,

"maxMessageSizeBytes" : 48000000,

"maxWriteBatchSize" : 100000,

"localTime" : ISODate("2018-09-14T08:13:42.148Z"),

"logicalSessionTimeoutMinutes" : 30,

"minWireVersion" : 0,

"maxWireVersion" : 7,

"readOnly" : false,

"ok" : 1,

"operationTime" : Timestamp(1536912816, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536912816, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}
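
db.isMaster() also answers on secondaries and arbiters, so any member can be asked which host is currently primary, which is handy after a failover. A sketch with a hypothetical current_primary function, defaulting to the secondary on port 7777 (MONGO=echo prints the command instead of running it):

```shell
#!/usr/bin/env bash
# Hypothetical helper: ask the member on PORT which host is primary,
# using the "primary" field of db.isMaster().
MONGO="${MONGO:-mongo}"

current_primary() {
  local port="${1:-7777}"
  "$MONGO" --quiet --port "$port" --eval 'print(db.isMaster().primary)'
}
```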

MongoDB Enterprise OurReplica:PRIMARY>

Note: The primary handles both read and write operations, the secondaries are for read-only purposes, and the arbiter only takes part in elections (it holds no copy of the data).

Create Database from Primary Server

[root@nosqlDB data]# pwd
/usr/server1/mongodb/data
[root@nosqlDB data]# ls -l
total 536
-rw-------. 1 root root  36864 Sep 14 13:41 collection-9--7051616006171547431.wt
drwx------. 2 root root   4096 Sep 14 13:45 diagnostic.data
-rw-------. 1 root root  16384 Sep 14 12:52 index-10--7051616006171547431.wt
-rw-------. 1 root root  16384 Sep 14 11:36 index-7--7051616006171547431.wt
drwx------. 2 root root   4096 Sep 14 11:35 journal
-rw-------. 1 root root  36864 Sep 14 12:56 _mdb_catalog.wt
-rw-------. 1 root root      5 Sep 14 11:35 mongod.lock
-rw-------. 1 root root  36864 Sep 14 13:45 sizeStorer.wt
-rw-------. 1 root root    114 Sep 14 11:35 storage.bson
-rw-------. 1 root root     46 Sep 14 11:35 WiredTiger
-rw-------. 1 root root   4096 Sep 14 11:35 WiredTigerLAS.wt
-rw-------. 1 root root     21 Sep 14 11:35 WiredTiger.lock
-rw-------. 1 root root   1049 Sep 14 13:45 WiredTiger.turtle
-rw-------. 1 root root 102400 Sep 14 13:45 WiredTiger.wt
[root@nosqlDB data]#

MongoDB Enterprise OurReplica:PRIMARY> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

MongoDB Enterprise OurReplica:PRIMARY> use RepDB

switched to db RepDB

MongoDB Enterprise OurReplica:PRIMARY> show collections

MongoDB Enterprise OurReplica:PRIMARY>

MongoDB Enterprise OurReplica:PRIMARY> db.createCollection("First_rep_doc");

{

"ok" : 1,

"operationTime" : Timestamp(1536913185, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536913185, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise OurReplica:PRIMARY>

MongoDB Enterprise OurReplica:PRIMARY> show collections;

First_rep_doc

MongoDB Enterprise OurReplica:PRIMARY>

MongoDB Enterprise OurReplica:PRIMARY> db.createCollection("mysecond_rep_doc");
{
"ok" : 1,
"operationTime" : Timestamp(1536913501, 1),
"$clusterTime" : {
"clusterTime" : Timestamp(1536913501, 1),
"signature" : {
"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
"keyId" : NumberLong(0)
}
}
}

MongoDB Enterprise OurReplica:PRIMARY>

Connect to Secondary Server and Verify

MongoDB Enterprise OurReplica:SECONDARY> db

test

MongoDB Enterprise OurReplica:SECONDARY> show dbs

2018-09-14T13:58:50.306+0530 E QUERY [js] Error: listDatabases failed:{
"operationTime" : Timestamp(1536913726, 1),
"ok" : 0,
"errmsg" : "not master and slaveOk=false",
"code" : 13435,
"codeName" : "NotMasterNoSlaveOk",
"$clusterTime" : {
"clusterTime" : Timestamp(1536913726, 1),
"signature" : {
"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
"keyId" : NumberLong(0)
}
}
} :

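The command fails because, by default, a secondary rejects reads on any connection that has not explicitly allowed them ("not master and slaveOk=false"). Secondaries are read-only, so we have to tell this shell connection that reading from a secondary is acceptable. Run rs.slaveOk() in the secondary's shell to do this: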

MongoDB Enterprise OurReplica:SECONDARY> rs.slaveOk()

MongoDB Enterprise OurReplica:SECONDARY>
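rs.slaveOk() only affects the current shell connection. An application usually expresses the same intent through the readPreference option of its connection string, together with the replicaSet option so the driver can discover the primary by itself. buildReplicaSetUri below is a hypothetical helper that assembles such a URI for the two data-bearing members of this guide; replicaSet and readPreference are standard connection-string options, but check your driver's documentation for how it applies them.

```javascript
// Hypothetical helper: builds a MongoDB connection string that names the
// replica set and tells the driver which members it may read from.
function buildReplicaSetUri(hosts, replSetName, readPreference) {
  const params = [
    "replicaSet=" + encodeURIComponent(replSetName),
    "readPreference=" + encodeURIComponent(readPreference)
  ];
  return "mongodb://" + hosts.join(",") + "/?" + params.join("&");
}

const uri = buildReplicaSetUri(
  ["127.0.0.1:6666", "127.0.0.1:7777"], // data-bearing members only (no arbiter)
  "OurReplica",
  "secondaryPreferred" // read from a secondary, fall back to the primary if none is available
);
```

With secondaryPreferred, reads go to a secondary when one is available and fall back to the primary otherwise; writes always go to the primary.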

View Databases from Secondary Server

MongoDB Enterprise OurReplica:SECONDARY> show dbs;

AshokDB 0.000GB

RepDB 0.000GB

admin 0.000GB

config 0.000GB

local 0.000GB

MongoDB Enterprise OurReplica:SECONDARY>

View Collection from Secondary Server

MongoDB Enterprise OurReplica:SECONDARY> use RepDB

switched to db RepDB

MongoDB Enterprise OurReplica:SECONDARY> show collections;

First_rep_doc

MongoDB Enterprise OurReplica:SECONDARY>
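Comparing collection names by eye works for one or two collections, but a small diff scales better. missingCollections is a hypothetical helper that takes the arrays printed by db.getCollectionNames() on the primary and on a secondary (after rs.slaveOk()) and lists the names present on the first but not on the second. The sample arrays are illustrative, not captured output.

```javascript
// Hypothetical helper: names present in the first list but missing from the
// second, e.g. db.getCollectionNames() on the primary vs. on a secondary.
function missingCollections(primaryNames, secondaryNames) {
  const onSecondary = new Set(secondaryNames);
  return primaryNames.filter(function (name) {
    return !onSecondary.has(name);
  });
}

// Illustrative input: paste the arrays printed by each member.
const primaryCollections = ["First_rep_doc", "mysecond_rep_doc"];
const secondaryCollections = ["First_rep_doc"];
const notYetVisible = missingCollections(primaryCollections, secondaryCollections);
// notYetVisible is ["mysecond_rep_doc"]
```

An empty result means every collection seen on the primary is also visible on the secondary. Because replication is asynchronous, a non-empty result is worth rechecking after a few seconds before treating it as a problem.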

Server 3 (Arbiter)

Running rs.status() at this point reported the following error for the arbiter member:

"lastHeartbeatMessage" : "Error connecting to 127.0.0.1:8888 :: caused by :: Connection refused",

"Connection refused" means nothing was listening on port 8888, i.e. the arbiter's mongod on Server 3 was not running. To resolve it, start the mongod service on Server 3 again:

[root@nosqlDB mongodb]# mongod -f mongodb.conf
2018-09-14T15:33:59.318+0530 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'
about to fork child process, waiting until server is ready for connections.
forked process: 4375
child process started successfully, parent exiting
[root@nosqlDB mongodb]# mongo --port 8888
MongoDB shell version v4.0.0
connecting to: mongodb://127.0.0.1:8888/
MongoDB server version: 4.0.0
Server has startup warnings:
2018-09-14T15:33:59.335+0530 I STORAGE [initandlisten]
2018-09-14T15:33:59.335+0530 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine
2018-09-14T15:33:59.335+0530 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem
2018-09-14T15:34:00.812+0530 I CONTROL [initandlisten]
2018-09-14T15:34:00.812+0530 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
2018-09-14T15:34:00.812+0530 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
2018-09-14T15:34:00.812+0530 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
2018-09-14T15:34:00.812+0530 I CONTROL [initandlisten]
2018-09-14T15:34:00.813+0530 I CONTROL [initandlisten]
2018-09-14T15:34:00.813+0530 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
2018-09-14T15:34:00.813+0530 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2018-09-14T15:34:00.813+0530 I CONTROL [initandlisten]
2018-09-14T15:34:00.813+0530 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
2018-09-14T15:34:00.813+0530 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2018-09-14T15:34:00.813+0530 I CONTROL [initandlisten]
MongoDB Enterprise OurReplica:ARBITER>
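A "Connection refused" heartbeat like the one above is easy to miss in a long rs.status() document. findUnhealthyMembers is a hypothetical helper that filters the members array down to those whose health is not 1 or whose lastHeartbeatMessage is non-empty. The sample document is hand-written to mimic the arbiter outage described above; the exact stateStr a down member reports may differ in your version.

```javascript
// Hypothetical helper: lists members of an rs.status() document that look
// unhealthy, i.e. health is not 1 or the last heartbeat reported an error.
function findUnhealthyMembers(status) {
  return status.members
    .filter(function (m) {
      const message = m.lastHeartbeatMessage || "";
      return m.health !== 1 || message !== "";
    })
    .map(function (m) {
      return {
        name: m.name,
        stateStr: m.stateStr,
        message: m.lastHeartbeatMessage || ""
      };
    });
}

// Hand-written sample mimicking the arbiter outage (assumed field values).
const statusWithDownArbiter = {
  set: "OurReplica",
  members: [
    { name: "127.0.0.1:6666", health: 1, stateStr: "PRIMARY", lastHeartbeatMessage: "" },
    { name: "127.0.0.1:7777", health: 1, stateStr: "SECONDARY", lastHeartbeatMessage: "" },
    {
      name: "127.0.0.1:8888",
      health: 0,
      stateStr: "(not reachable/healthy)",
      lastHeartbeatMessage:
        "Error connecting to 127.0.0.1:8888 :: caused by :: Connection refused"
    }
  ]
};
```

In the shell, printjson(findUnhealthyMembers(rs.status())) should print an empty array once the arbiter is back up.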

Verify the Replication Status from Primary Server

MongoDB Enterprise OurReplica:PRIMARY> rs.status();

{
"set" : "OurReplica",
"date" : ISODate("2018-09-14T10:04:39.781Z"),
"myState" : 1,
"term" : NumberLong(1),
"syncingTo" : "",
"syncSourceHost" : "",
"syncSourceId" : -1,
"heartbeatIntervalMillis" : NumberLong(2000),
"optimes" : {
"lastCommittedOpTime" : {
"ts" : Timestamp(1536919477, 1),
"t" : NumberLong(1)
},
"readConcernMajorityOpTime" : {
"ts" : Timestamp(1536919477, 1),
"t" : NumberLong(1)
},
"appliedOpTime" : {
"ts" : Timestamp(1536919477, 1),
"t" : NumberLong(1)
},
"durableOpTime" : {
"ts" : Timestamp(1536919477, 1),
"t" : NumberLong(1)
}
},
"lastStableCheckpointTimestamp" : Timestamp(1536919457, 1),
"members" : [
{
"_id" : 0,
"name" : "127.0.0.1:6666",
"health" : 1,
"state" : 1,
"stateStr" : "PRIMARY",
"uptime" : 14358,
"optime" : {
"ts" : Timestamp(1536919477, 1),
"t" : NumberLong(1)
},
"optimeDate" : ISODate("2018-09-14T10:04:37Z"),
"syncingTo" : "",
"syncSourceHost" : "",
"syncSourceId" : -1,
"infoMessage" : "",
"electionTime" : Timestamp(1536909735, 2),
"electionDate" : ISODate("2018-09-14T07:22:15Z"),
"configVersion" : 7,
"self" : true,
"lastHeartbeatMessage" : ""
},
{
"_id" : 1,
"name" : "127.0.0.1:7777",
"health" : 1,
"state" : 2,
"stateStr" : "SECONDARY",
"uptime" : 8643,
"optime" : {
"ts" : Timestamp(1536919477, 1),
"t" : NumberLong(1)
},
"optimeDurable" : {
"ts" : Timestamp(1536919477, 1),
"t" : NumberLong(1)
},
"optimeDate" : ISODate("2018-09-14T10:04:37Z"),
"optimeDurableDate" : ISODate("2018-09-14T10:04:37Z"),
"lastHeartbeat" : ISODate("2018-09-14T10:04:37.952Z"),
"lastHeartbeatRecv" : ISODate("2018-09-14T10:04:37.952Z"),
"pingMs" : NumberLong(0),
"lastHeartbeatMessage" : "",
"syncingTo" : "127.0.0.1:6666",
"syncSourceHost" : "127.0.0.1:6666",
"syncSourceId" : 0,
"infoMessage" : "",
"configVersion" : 7
},
{
"_id" : 2,
"name" : "127.0.0.1:8888",
"health" : 1,
"state" : 7,
"stateStr" : "ARBITER",
"uptime" : 36,
"lastHeartbeat" : ISODate("2018-09-14T10:04:38.811Z"),
"lastHeartbeatRecv" : ISODate("2018-09-14T10:04:38.898Z"),
"pingMs" : NumberLong(0),
"lastHeartbeatMessage" : "",
"syncingTo" : "",
"syncSourceHost" : "",
"syncSourceId" : -1,
"infoMessage" : "",
"configVersion" : 7
}
],
"ok" : 1,
"operationTime" : Timestamp(1536919477, 1),
"$clusterTime" : {
"clusterTime" : Timestamp(1536919477, 1),
"signature" : {
"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
"keyId" : NumberLong(0)
}
}
}

MongoDB Enterprise OurReplica:PRIMARY>
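Both data-bearing members above report the same optimeDate, which means the secondary has applied everything the primary has. replicationLagSeconds is a hypothetical helper that turns this comparison into numbers: for every SECONDARY it subtracts the member's optimeDate from the primary's. It assumes optimeDate values are JavaScript Date objects, which is what ISODate(...) produces in the mongo shell. In MongoDB 4.0 the shell's built-in rs.printSlaveReplicationInfo() reports similar information.

```javascript
// Hypothetical helper: seconds by which each SECONDARY's optimeDate trails
// the PRIMARY's in an rs.status() document. Arbiters have no optime and are skipped.
function replicationLagSeconds(status) {
  const primary = status.members.find(function (m) {
    return m.stateStr === "PRIMARY";
  });
  if (!primary) {
    throw new Error("no PRIMARY member in this status document");
  }
  const lag = {};
  status.members.forEach(function (m) {
    if (m.stateStr === "SECONDARY") {
      lag[m.name] = (primary.optimeDate.getTime() - m.optimeDate.getTime()) / 1000;
    }
  });
  return lag;
}

// Values copied from the rs.status() output above.
const statusSample = {
  members: [
    { name: "127.0.0.1:6666", stateStr: "PRIMARY", optimeDate: new Date("2018-09-14T10:04:37Z") },
    { name: "127.0.0.1:7777", stateStr: "SECONDARY", optimeDate: new Date("2018-09-14T10:04:37Z") },
    { name: "127.0.0.1:8888", stateStr: "ARBITER" }
  ]
};
```

For the output above the helper reports a lag of 0 seconds for 127.0.0.1:7777. In the shell: printjson(replicationLagSeconds(rs.status())).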

MongoDB Enterprise OurReplica:PRIMARY> rs.conf();

{
"_id" : "OurReplica",
"version" : 7,
"protocolVersion" : NumberLong(1),
"writeConcernMajorityJournalDefault" : true,
"members" : [
{
"_id" : 0,
"host" : "127.0.0.1:6666",
"arbiterOnly" : false,
"buildIndexes" : true,
"hidden" : false,
"priority" : 1,
"tags" : {
},
"slaveDelay" : NumberLong(0),
"votes" : 1
},
{
"_id" : 1,
"host" : "127.0.0.1:7777",
"arbiterOnly" : false,
"buildIndexes" : true,
"hidden" : false,
"priority" : 1,
"tags" : {
},
"slaveDelay" : NumberLong(0),
"votes" : 1
},
{
"_id" : 2,
"host" : "127.0.0.1:8888",
"arbiterOnly" : true,
"buildIndexes" : true,
"hidden" : false,
"priority" : 0,
"tags" : {
},
"slaveDelay" : NumberLong(0),
"votes" : 1
}
],
"settings" : {
"chainingAllowed" : true,
"heartbeatIntervalMillis" : 2000,
"heartbeatTimeoutSecs" : 10,
"electionTimeoutMillis" : 10000,
"catchUpTimeoutMillis" : -1,
"catchUpTakeoverDelayMillis" : 30000,
"getLastErrorModes" : {
},
"getLastErrorDefaults" : {
"w" : 1,
"wtimeout" : 0
},
"replicaSetId" : ObjectId("5b9b61a67b1454a13fc7610a")
}
}
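The configuration above has three voting members, each with "votes" : 1: two data-bearing members and one arbiter ("arbiterOnly" : true, "priority" : 0). A candidate must collect votes from a strict majority of the voting members to become primary, which is what makes the arbiter useful. The two functions below are hypothetical helpers, sketched under the assumption that you pass them the document returned by rs.conf(); sampleConfig is a trimmed copy of the output above.

```javascript
// Hypothetical helper: votes a candidate needs to become primary, i.e. a
// strict majority of all votes in an rs.conf() document.
function votesNeededToElect(config) {
  const totalVotes = config.members.reduce(function (sum, m) {
    return sum + m.votes;
  }, 0);
  return Math.floor(totalVotes / 2) + 1;
}

// Hypothetical helper: counts members that actually store data (non-arbiters).
function dataBearingMembers(config) {
  return config.members.filter(function (m) {
    return !m.arbiterOnly;
  }).length;
}

// Trimmed copy of the rs.conf() output above.
const sampleConfig = {
  _id: "OurReplica",
  members: [
    { _id: 0, host: "127.0.0.1:6666", arbiterOnly: false, votes: 1 },
    { _id: 1, host: "127.0.0.1:7777", arbiterOnly: false, votes: 1 },
    { _id: 2, host: "127.0.0.1:8888", arbiterOnly: true, votes: 1 }
  ]
};
```

With three votes the majority is 2, so either data-bearing member plus the arbiter can elect a primary. With only the two data-bearing members the majority is still 2 of 2, so if either one goes down the survivor cannot become primary.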

Whom to Sync From?

MongoDB Enterprise OurReplica:PRIMARY> rs.debug.getLastOpWritten()
{
"ts" : Timestamp(1536923802, 1),
"t" : NumberLong(3),
"h" : NumberLong("3203467780775746843"),
"v" : 2,
"op" : "n",
"ns" : "",
"wall" : ISODate("2018-09-14T11:16:42.846Z"),
"o" : {
"msg" : "periodic noop"
}
}
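The "ts" field above is a BSON Timestamp: its first argument is seconds since the Unix epoch and its second is an ordinal that orders operations within that second. The "op" : "n" entry is a no-op ("periodic noop") written by the primary. timestampSecondsToIso is a hypothetical helper showing the conversion; pass it the first number inside Timestamp(...).

```javascript
// Hypothetical helper: converts the seconds part of a BSON Timestamp(seconds, ordinal)
// into an ISO-8601 UTC string. The ordinal only orders events within one second,
// so it is ignored here.
function timestampSecondsToIso(seconds) {
  return new Date(seconds * 1000).toISOString();
}

// First argument of "ts" : Timestamp(1536923802, 1) in the output above.
const lastOpIso = timestampSecondsToIso(1536923802);
// lastOpIso is "2018-09-14T11:16:42.000Z", the same second as the "wall" field.
```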

MongoDB Enterprise OurReplica:PRIMARY> db.adminCommand({replSetGetStatus:1})
{
"set" : "OurReplica",
"date" : ISODate("2018-09-14T11:17:38.366Z"),
"myState" : 1,
"term" : NumberLong(3),
"syncingTo" : "",
"syncSourceHost" : "",
"syncSourceId" : -1,
"heartbeatIntervalMillis" : NumberLong(2000),
"optimes" : {
"lastCommittedOpTime" : {
"ts" : Timestamp(1536923852, 1),
"t" : NumberLong(3)
},
"readConcernMajorityOpTime" : {
"ts" : Timestamp(1536923852, 1),
"t" : NumberLong(3)
},
"appliedOpTime" : {
"ts" : Timestamp(1536923852, 1),
"t" : NumberLong(3)
},
"durableOpTime" : {
"ts" : Timestamp(1536923852, 1),
"t" : NumberLong(3)
}
},
"lastStableCheckpointTimestamp" : Timestamp(1536923792, 1),
"members" : [
{
"_id" : 0,
"name" : "127.0.0.1:6666",
"health" : 1,
"state" : 1,
"stateStr" : "PRIMARY",
"uptime" : 1987,
"optime" : {
"ts" : Timestamp(1536923852, 1),
"t" : NumberLong(3)
},
"optimeDate" : ISODate("2018-09-14T11:17:32Z"),
"syncingTo" : "",
"syncSourceHost" : "",
"syncSourceId" : -1,
"infoMessage" : "",
"electionTime" : Timestamp(1536921910, 1),
"electionDate" : ISODate("2018-09-14T10:45:10Z"),
"configVersion" : 14,
"self" : true,
"lastHeartbeatMessage" : ""
},
{
"_id" : 1,
"name" : "127.0.0.1:7777",
"health" : 1,
"state" : 2,
"stateStr" : "SECONDARY",
"uptime" : 296,
"optime" : {
"ts" : Timestamp(1536923852, 1),
"t" : NumberLong(3)
},
"optimeDurable" : {
"ts" : Timestamp(1536923852, 1),
"t" : NumberLong(3)
},
"optimeDate" : ISODate("2018-09-14T11:17:32Z"),
"optimeDurableDate" : ISODate("2018-09-14T11:17:32Z"),
"lastHeartbeat" : ISODate("2018-09-14T11:17:37.918Z"),
"lastHeartbeatRecv" : ISODate("2018-09-14T11:17:37.917Z"),
"pingMs" : NumberLong(0),
"lastHeartbeatMessage" : "",
"syncingTo" : "127.0.0.1:6666",
"syncSourceHost" : "127.0.0.1:6666",
"syncSourceId" : 0,
"infoMessage" : "",
"configVersion" : 14
}
],
"ok" : 1,
"operationTime" : Timestamp(1536923852, 1),
"$clusterTime" : {
"clusterTime" : Timestamp(1536923852, 1),
"signature" : {
"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
"keyId" : NumberLong(0)
}
}
}

MongoDB Enterprise OurReplica:PRIMARY>
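The syncSourceHost field answers "whom to sync from": in the output above the secondary 127.0.0.1:7777 replicates from 127.0.0.1:6666, while the primary reports an empty value. syncSources is a hypothetical helper that collects that field for every member of a replSetGetStatus or rs.status() document; replStatusSample is a trimmed copy of the output above. If you ever need to override the automatic choice, the shell also offers rs.syncFrom("host:port"); read its documentation first, as the override is not permanent.

```javascript
// Hypothetical helper: maps each member name to the host it replicates from,
// based on the syncSourceHost field of rs.status() / replSetGetStatus output.
function syncSources(status) {
  const sources = {};
  status.members.forEach(function (m) {
    // The primary (and any arbiter) reports an empty syncSourceHost.
    sources[m.name] = m.syncSourceHost ? m.syncSourceHost : "(none)";
  });
  return sources;
}

// Values copied from the replSetGetStatus output above.
const replStatusSample = {
  members: [
    { name: "127.0.0.1:6666", stateStr: "PRIMARY", syncSourceHost: "" },
    { name: "127.0.0.1:7777", stateStr: "SECONDARY", syncSourceHost: "127.0.0.1:6666" }
  ]
};
```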

Forcing a New Election

The current primary can be forced to step down with the rs.stepDown() command. You might do this:

- To simulate a primary failure and force the set to fail over, so you can test how your application responds in that scenario.
- When the primary server needs to go offline for maintenance, an upgrade, or an investigation of the server.
- When a diagnostic process needs to be run against the data structures.

MongoDB Enterprise OurReplica:PRIMARY> use admin
switched to db admin
MongoDB Enterprise OurReplica:PRIMARY> rs.stepDown()
2018-09-14T16:55:29.990+0530 E QUERY [js] Error: error doing query: failed: network error while attempting to run command 'replSetStepDown' on host '127.0.0.1:6666' :
DB.prototype.runCommand@src/mongo/shell/db.js:168:1
DB.prototype.adminCommand@src/mongo/shell/db.js:185:1
rs.stepDown@src/mongo/shell/utils.js:1398:12
@(shell):1:1
2018-09-14T16:55:29.991+0530 I NETWORK [js] trying reconnect to 127.0.0.1:6666 failed
2018-09-14T16:55:29.992+0530 I NETWORK [js] reconnect 127.0.0.1:6666 ok
MongoDB Enterprise OurReplica:SECONDARY>

The network error is expected: in MongoDB 4.0, a primary that steps down closes its client connections, so the shell loses its connection and reconnects. The prompt now shows SECONDARY, and rs.status() confirms that 127.0.0.1:7777 has been elected the new primary:

MongoDB Enterprise OurReplica:SECONDARY> rs.status();

{
"set" : "OurReplica",
"date" : ISODate("2018-09-14T11:30:07.424Z"),
"myState" : 2,
"term" : NumberLong(4),
"syncingTo" : "127.0.0.1:7777",
"syncSourceHost" : "127.0.0.1:7777",
"syncSourceId" : 1,
"heartbeatIntervalMillis" : NumberLong(2000),
"optimes" : {
"lastCommittedOpTime" : {
"ts" : Timestamp(1536924599, 1),
"t" : NumberLong(4)
},
"readConcernMajorityOpTime" : {
"ts" : Timestamp(1536924599, 1),
"t" : NumberLong(4)
},
"appliedOpTime" : {
"ts" : Timestamp(1536924599, 1),
"t" : NumberLong(4)
},
"durableOpTime" : {
"ts" : Timestamp(1536924599, 1),
"t" : NumberLong(4)
}
},
"lastStableCheckpointTimestamp" : Timestamp(1536924569, 1),
"members" : [
{
"_id" : 0,
"name" : "127.0.0.1:6666",
"health" : 1,
"state" : 2,
"stateStr" : "SECONDARY",
"uptime" : 2736,
"optime" : {
"ts" : Timestamp(1536924599, 1),
"t" : NumberLong(4)
},
"optimeDate" : ISODate("2018-09-14T11:29:59Z"),
"syncingTo" : "127.0.0.1:7777",
"syncSourceHost" : "127.0.0.1:7777",
"syncSourceId" : 1,
"infoMessage" : "",
"configVersion" : 18,
"self" : true,
"lastHeartbeatMessage" : ""
},
{
"_id" : 1,
"name" : "127.0.0.1:7777",
"health" : 1,
"state" : 1,
"stateStr" : "PRIMARY",
"uptime" : 453,
"optime" : {
"ts" : Timestamp(1536924599, 1),
"t" : NumberLong(4)
},
"optimeDurable" : {
"ts" : Timestamp(1536924599, 1),
"t" : NumberLong(4)
},
"optimeDate" : ISODate("2018-09-14T11:29:59Z"),
"optimeDurableDate" : ISODate("2018-09-14T11:29:59Z"),
"lastHeartbeat" : ISODate("2018-09-14T11:30:06.805Z"),
"lastHeartbeatRecv" : ISODate("2018-09-14T11:30:06.819Z"),
"pingMs" : NumberLong(0),
"lastHeartbeatMessage" : "",
"syncingTo" : "",
"syncSourceHost" : "",
"syncSourceId" : -1,
"infoMessage" : "",
"electionTime" : Timestamp(1536924338, 1),
"electionDate" : ISODate("2018-09-14T11:25:38Z"),
"configVersion" : 18
}
],
"ok" : 1,
"operationTime" : Timestamp(1536924599, 1),
"$clusterTime" : {
"clusterTime" : Timestamp(1536924599, 1),
"signature" : {
"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
"keyId" : NumberLong(0)
}
}
}

MongoDB Enterprise OurReplica:SECONDARY>

To move the primary role back, connect to the new primary (127.0.0.1:7777) and step it down as well:

MongoDB Enterprise OurReplica:PRIMARY>

MongoDB Enterprise OurReplica:PRIMARY> rs.stepDown();

2018-09-14T17:08:18.608+0530 E QUERY [js] Error: error doing query: failed: network error while attempting to run command 'replSetStepDown' on host '127.0.0.1:7777' :

DB.prototype.runCommand@src/mongo/shell/db.js:168:1

DB.prototype.adminCommand@src/mongo/shell/db.js:186:16

rs.stepDown@src/mongo/shell/utils.js:1398:12

@(shell):1:1

2018-09-14T17:08:18.609+0530 I NETWORK [js] trying reconnect to 127.0.0.1:7777 failed

2018-09-14T17:08:18.609+0530 I NETWORK [js] reconnect 127.0.0.1:7777 ok

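MongoDB Enterprise OurReplica:SECONDARY>

The shell reconnects after the step-down, and rs.status() now shows 127.0.0.1:6666 as the primary again (term 5):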

MongoDB Enterprise OurReplica:SECONDARY> rs.status();

{

"set" : "OurReplica",

"date" : ISODate("2018-09-14T11:38:33.938Z"),

"myState" : 2,

"term" : NumberLong(5),

"syncingTo" : "127.0.0.1:6666",

"syncSourceHost" : "127.0.0.1:6666",

"syncSourceId" : 0,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1536925109, 1),

"t" : NumberLong(5)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1536925109, 1),

"t" : NumberLong(5)

},

"appliedOpTime" : {

"ts" : Timestamp(1536925109, 1),

"t" : NumberLong(5)

},

"durableOpTime" : {

"ts" : Timestamp(1536925109, 1),

"t" : NumberLong(5)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1536925079, 1),

"members" : [

{

"_id" : 0,

"name" : "127.0.0.1:6666",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 961,

"optime" : {

"ts" : Timestamp(1536925109, 1),

"t" : NumberLong(5)

},

"optimeDurable" : {

"ts" : Timestamp(1536925109, 1),

"t" : NumberLong(5)

},

"optimeDate" : ISODate("2018-09-14T11:38:29Z"),

"optimeDurableDate" : ISODate("2018-09-14T11:38:29Z"),

"lastHeartbeat" : ISODate("2018-09-14T11:38:32.466Z"),

"lastHeartbeatRecv" : ISODate("2018-09-14T11:38:32.458Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1536925108, 1),

"electionDate" : ISODate("2018-09-14T11:38:28Z"),

"configVersion" : 18

},

{

"_id" : 1,

"name" : "127.0.0.1:7777",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 3214,

"optime" : {

"ts" : Timestamp(1536925109, 1),

"t" : NumberLong(5)

},

"optimeDate" : ISODate("2018-09-14T11:38:29Z"),

"syncingTo" : "127.0.0.1:6666",

"syncSourceHost" : "127.0.0.1:6666",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 18,

"self" : true,

"lastHeartbeatMessage" : ""

}

],

"ok" : 1,

"operationTime" : Timestamp(1536925109, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536925109, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise OurReplica:SECONDARY>
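The status outputs in this section show the primary moving from 127.0.0.1:6666 to 127.0.0.1:7777 and back. describeFailover is a hypothetical helper that compares a status document captured before rs.stepDown() with one captured afterwards and reports whether a different member became primary; the sample documents are trimmed to the fields it reads.

```javascript
// Hypothetical helper: returns the name of the PRIMARY member, or null.
function primaryName(status) {
  const primary = status.members.find(function (m) {
    return m.stateStr === "PRIMARY";
  });
  return primary ? primary.name : null;
}

// Hypothetical helper: compares rs.status() documents captured before and
// after rs.stepDown() and reports whether a different member became primary.
function describeFailover(before, after) {
  const oldPrimary = primaryName(before);
  const newPrimary = primaryName(after);
  return {
    oldPrimary: oldPrimary,
    newPrimary: newPrimary,
    failedOver: oldPrimary !== null && newPrimary !== null && oldPrimary !== newPrimary
  };
}

// Trimmed samples based on the member states shown in this section.
const beforeStepDown = {
  members: [
    { name: "127.0.0.1:6666", stateStr: "PRIMARY" },
    { name: "127.0.0.1:7777", stateStr: "SECONDARY" }
  ]
};
const afterStepDown = {
  members: [
    { name: "127.0.0.1:6666", stateStr: "SECONDARY" },
    { name: "127.0.0.1:7777", stateStr: "PRIMARY" }
  ]
};
```

In the shell you would store var before = rs.status(), run rs.stepDown() on its own line (it reports the network error shown above), then compare with var after = rs.status(). If no member has won the election yet, newPrimary is null; run rs.status() again after a few seconds.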
